SQL Interview Questions and Answers – 2021

1. What is DBMS?

A Database Management System (DBMS) is software that controls the creation, maintenance and use of a database. A database is a structured collection of data, and the DBMS manages that data on behalf of users and applications instead of leaving each application to store it in plain files.

A DBMS sits between the user, the applications, and the database itself. Through it, data can be stored, retrieved, modified and deleted, and the data can be of any type, such as strings, numbers or images.

DBMSs are commonly divided into two broad types:

- Relational Database Management System: The data is stored in relations (tables). Example – MySQL.

- Non-Relational Database Management System: The data is not organized into relations, tuples and attributes. Example – MongoDB.

2. What is RDBMS?

RDBMS stands for Relational Database Management System. An RDBMS stores data in a collection of tables, which are related to one another through common fields (columns). It also provides relational operators to manipulate the data stored in the tables.

3. What is SQL?

SQL stands for Structured Query Language. It is the standard language for maintaining relational databases and for performing data manipulation on the data they hold. SQL was first developed at IBM in the early 1970s. It is used to create and delete databases and tables and to fetch and modify rows, among other things. It is sometimes pronounced ‘sequel’.

4. What are the usages of SQL?

- To maintain the relational data and the data structures present in the database

- To execute queries against a database

- To retrieve data from a database

- To insert records in a database

- To update records in a database

- To delete records from a database

- To create new databases

- To create new tables in a database

- To create views in a database

- To perform complex operations on the database.

5. Does SQL support programming?

SQL stands for Structured Query Language. It is a declarative query language rather than a general-purpose programming language. Standard SQL queries have no loops or procedural control flow, although SQL does provide logical operators (AND, OR, NOT) and conditional expressions such as CASE; procedural extensions such as PL/SQL add loops and branching. It is used as a command (query) language to access databases. The primary purpose of SQL is to retrieve, manipulate and update data and to perform complex operations such as joins on the data present in the database.

6. What do you mean by table and field in SQL?

A table is a collection of data organised into rows and columns. A field is a column in a table; each field holds one attribute of every row.

For example:

Table: StudentInformation

Fields: Stu Id, Stu Name, Stu Marks

7. What are joins in SQL?

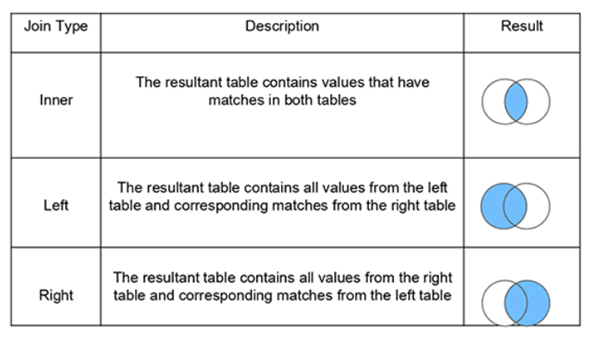

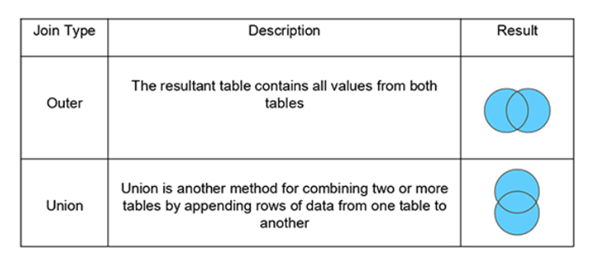

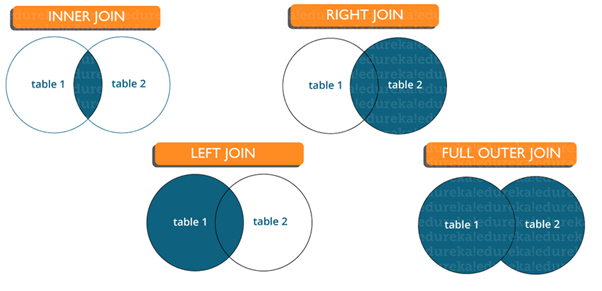

A JOIN clause is used to combine rows from two or more tables, based on a related column between them. There are four main types of join:

Inner Join: Inner Join is the most common type of join. It returns only the rows for which the join condition is satisfied in both tables.

Left Join: Left Join returns all the rows from the left table, together with the matching rows from the right table. Where there is no match, the right table's columns are NULL.

Right Join: Right Join returns all the rows from the right table, together with the matching rows from the left table. Where there is no match, the left table's columns are NULL.

Full Join: Full Join returns all the rows from both the left-hand and right-hand tables. Rows that match are combined, and rows without a match in the other table are padded with NULLs.
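The difference between an inner join and a left join can be seen in a minimal sketch. It assumes SQLite, driven through Python's built-in sqlite3 module, as a stand-in for whichever database is in use; the `students` and `marks` tables and their rows are hypothetical. Right and full joins are left out because older SQLite versions do not support them.

```python
import sqlite3

# Hypothetical sample data: three students, only two of whom have marks.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE students (stu_id INTEGER PRIMARY KEY, stu_name TEXT);
    CREATE TABLE marks (stu_id INTEGER, subject TEXT, score INTEGER);
    INSERT INTO students VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO marks VALUES (1, 'Maths', 90), (2, 'Maths', 75);
""")

# INNER JOIN keeps only students that have a matching row in marks.
inner_rows = conn.execute("""
    SELECT s.stu_name, m.score
    FROM students AS s
    INNER JOIN marks AS m ON m.stu_id = s.stu_id
    ORDER BY s.stu_id
""").fetchall()

# LEFT JOIN keeps every student; missing marks come back as NULL (None).
left_rows = conn.execute("""
    SELECT s.stu_name, m.score
    FROM students AS s
    LEFT JOIN marks AS m ON m.stu_id = s.stu_id
    ORDER BY s.stu_id
""").fetchall()

print(inner_rows)
print(left_rows)
```

The student without marks disappears from the inner join but appears in the left join with a NULL score.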

8. What is a Database?

A database is an organized collection of data that makes the data easy to access, store, retrieve and manage. Because the data is structured, it can be accessed in many ways.

Example: School Management Database, Bank Management Database.

9. What is a primary key?

A primary key is a field or combination of fields that uniquely identifies a row. It is a special kind of unique key with an implicit NOT NULL constraint, which means primary key values cannot be NULL.

10. What is a unique key?

A unique key constraint ensures that every value in a column, or set of columns, is distinct, so it can uniquely identify each record in a table.

A primary key constraint automatically has a unique constraint defined on it. The reverse is not true: a unique key is not automatically a primary key, and in most database systems a unique column can still accept NULL values.

There can be many unique constraints defined per table, but only one primary key constraint.
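As a rough illustration of how the two constraints behave, the sketch below uses SQLite through Python's sqlite3 module. The `students` table, its columns and the helper `try_insert` are hypothetical, and NULL handling in unique columns differs between database systems, so the last two results are specific to SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE students (
        enroll_no INTEGER PRIMARY KEY,  -- at most one primary key per table
        email     TEXT UNIQUE           -- a table may have many unique keys
    )
""")
conn.execute("INSERT INTO students VALUES (1, 'a@example.com')")

def try_insert(enroll_no, email):
    """Hypothetical helper: report whether the database accepts the row."""
    try:
        conn.execute("INSERT INTO students VALUES (?, ?)", (enroll_no, email))
        return "ok"
    except sqlite3.IntegrityError:
        return "rejected"

dup_primary_key = try_insert(1, "b@example.com")  # primary key 1 already exists
dup_unique_key = try_insert(2, "a@example.com")   # email already exists
null_unique_1 = try_insert(3, None)               # NULL in a unique column
null_unique_2 = try_insert(4, None)               # SQLite accepts a second NULL

print(dup_primary_key, dup_unique_key, null_unique_1, null_unique_2)
```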

11. What is a foreign key?

A foreign key is a column, or set of columns, in one table that refers to the primary key of another table. The relationship between the two tables is created by declaring that the foreign key references the primary key of the other table.

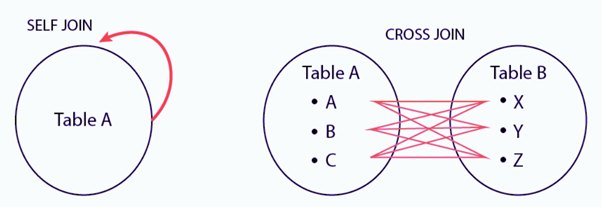
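A foreign key relationship might be declared and enforced as in the following sketch. It assumes SQLite via Python's sqlite3 module, where enforcement has to be switched on with `PRAGMA foreign_keys`; the `departments` and `employees` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE departments (dept_id INTEGER PRIMARY KEY, dept_name TEXT);
    CREATE TABLE employees (
        emp_id   INTEGER PRIMARY KEY,
        emp_name TEXT,
        dept_id  INTEGER REFERENCES departments (dept_id)  -- the foreign key
    );
    INSERT INTO departments VALUES (10, 'Sales');
    INSERT INTO employees VALUES (1, 'Asha', 10);
""")

# Department 99 does not exist, so this row would break the relationship.
try:
    conn.execute("INSERT INTO employees VALUES (2, 'Ben', 99)")
    orphan_insert = "ok"
except sqlite3.IntegrityError:
    orphan_insert = "rejected"

print(orphan_insert)
```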

12. What is a Self-Join?

A self JOIN is a case of regular join where a table is joined to itself based on some relation between its own column(s). Self-join uses the INNER JOIN or LEFT JOIN clause and a table alias is used to assign different names to the table within the query.

SELECT A.emp_id AS "Emp_ID",A.emp_name AS "Employee",

B.emp_id AS "Sup_ID",B.emp_name AS "Supervisor"

FROM employee A, employee B

WHERE A.emp_sup = B.emp_id;

13. What is a Cross-Join?

A cross join is the Cartesian product of the two tables included in the join. The result contains as many rows as the number of rows in the first table multiplied by the number of rows in the second. If a WHERE clause relating the two tables is added to a cross join, the query behaves like an INNER JOIN.

SELECT stu.name, sub.subject

FROM students AS stu

CROSS JOIN subjects AS sub;

14. What is the difference between CHAR and VARCHAR2 datatype in SQL?

Both CHAR and VARCHAR2 are character datatypes, but VARCHAR2 is used for character strings of variable length whereas CHAR is used for strings of fixed length. For example, CHAR(10) always stores exactly 10 characters, padding shorter strings with trailing spaces, whereas VARCHAR2(10) can store a string of any length up to 10 (for example 2, 6 or 8 characters) without padding.

15. What are Constraints?

Constraints in SQL are rules that limit the data that can be stored in a table. They can be specified when creating the table or later when altering it. Some examples of constraints are:

- NOT NULL

- CHECK

- DEFAULT

- UNIQUE

- PRIMARY KEY

- FOREIGN KEY
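Several of these constraints can be combined in one table definition. The sketch below is only an illustration, assuming SQLite through Python's sqlite3 module; the `students` table and the helper `violates` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE students (
        stu_id    INTEGER PRIMARY KEY,
        stu_name  TEXT NOT NULL,
        stu_marks INTEGER DEFAULT 0 CHECK (stu_marks BETWEEN 0 AND 100)
    )
""")

# No marks supplied, so the DEFAULT value is stored.
conn.execute("INSERT INTO students (stu_id, stu_name) VALUES (1, 'Asha')")
default_marks = conn.execute(
    "SELECT stu_marks FROM students WHERE stu_id = 1"
).fetchone()[0]

def violates(sql):
    """Hypothetical helper: True if the statement breaks a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True

null_name_rejected = violates(
    "INSERT INTO students (stu_id, stu_name) VALUES (2, NULL)"  # NOT NULL
)
bad_marks_rejected = violates(
    "INSERT INTO students VALUES (3, 'Ben', 150)"  # CHECK range is 0 to 100
)

print(default_marks, null_name_rejected, bad_marks_rejected)
```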

16. What is the difference between DELETE and TRUNCATE statements?

| DELETE | TRUNCATE |
| --- | --- |
| Deletes one or more rows from a table, optionally filtered by a WHERE clause. | Deletes all the rows from a table. |
| You can roll back data after using a DELETE statement. | In many systems (for example Oracle and MySQL) the operation cannot be rolled back. |
| It is a DML command. | It is a DDL command. |
| It is slower than the TRUNCATE statement. | It is faster. |

17. What do you mean by data integrity?

Data Integrity defines the accuracy as well as the consistency of the data stored in a database. It also defines integrity constraints to enforce business rules on the data when it is entered into an application or a database.

18. What is an Index?

An index is a performance-tuning structure that allows faster retrieval of records from a table. It stores an entry for each indexed value that points to the matching rows, so lookups do not have to scan the whole table.

CREATE INDEX index_name /* Create Index */

ON table_name (column_1, column_2);

DROP INDEX index_name; /* Drop Index */

19. Explain different types of index in SQL.

There are three types of index in SQL namely:

Unique Index:

A unique index does not allow the indexed column to hold duplicate values. When a primary key is defined, a unique index is usually created for it automatically.

CREATE UNIQUE INDEX myIndex

ON students (enroll_no);

Clustered Index:

A clustered index determines the physical order in which the table's rows are stored and searches on the basis of key values. Each table can have only one clustered index.

Non-Clustered Index:

Non-Clustered Index does not alter the physical order of the table and maintains a logical order of the data. Each table can have many nonclustered indexes.
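To see an index change how a lookup is performed, one option is to compare query plans before and after creating it. The sketch below assumes SQLite via Python's sqlite3 module and its `EXPLAIN QUERY PLAN` statement, whose wording varies between versions; the table, the index name `idx_students_enroll` and the helper `plan_for` are hypothetical. SQLite does not have the clustered versus non-clustered distinction described above, so this only shows the general effect of an index.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (enroll_no INTEGER, name TEXT)")

def plan_for(sql):
    """Hypothetical helper: return the planner's description of a query."""
    # The last column of each EXPLAIN QUERY PLAN row is a readable summary.
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

query = "SELECT name FROM students WHERE enroll_no = 42"

plan_before = plan_for(query)  # without an index the table is scanned
conn.execute("CREATE INDEX idx_students_enroll ON students (enroll_no)")
plan_after = plan_for(query)   # with the index the planner can search it

print(plan_before)
print(plan_after)
```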

20. What is a Data Definition Language?

Data definition language (DDL) is the subset of SQL that defines the structure of the database, starting from when the database is first created. It consists of commands such as CREATE, ALTER and DROP, which act on database objects such as schemas, tables, views and sequences.

21. What is a Data Manipulation Language?

Data manipulation language (DML) lets the user retrieve and manipulate data. It is used to perform the following operations.

- Insert data into database through INSERT command.

- Retrieve data from the database through SELECT command.

- Update data in the database through UPDATE command.

- Delete data from the database through DELETE command.

22. What is Data Control Language?

Data control language (DCL) allows you to control access to the database. It is the subset of SQL that determines which users may access which parts of the database. It includes two commands, GRANT and REVOKE.

GRANT: gives a specific user permission to perform a particular task.

REVOKE: cancels previously granted or denied permissions.

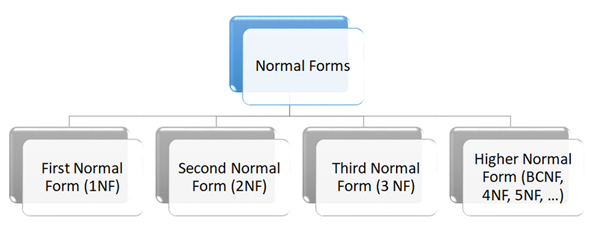

23. What is Normalization in SQL?

Normalization is used to decompose a larger, complex table into simpler and smaller ones. This helps us remove redundant data.

Generally, a single large table holds a lot of redundant information, so it is better to divide it into multiple smaller tables, each of which contains only unique information.

Let’s look at the rules for a table to be in first normal form, second normal form and third normal form:

First normal form:

A relation schema is in 1NF if and only if:

- All attributes in the relation are atomic (each holds a single, indivisible value).

- There are no repeating elements or groups of elements.

Second normal form:

A relation is in 2NF if and only if:

- It is in 1NF.

- No partial dependency exists, that is, no non-key attribute depends on only part of a composite key.

Third normal form:

A relation R is in 3NF if and only if:

- It is in 2NF.

- No transitive dependency exists, that is, no non-key attribute depends on the key only through another non-key attribute.
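The idea of removing a transitive dependency can be sketched with a small decomposition. The example assumes SQLite via Python's sqlite3 module, and the `orders_flat`, `customers` and `orders` tables are hypothetical: in the flat table, `city` depends on `customer`, which in turn depends on the key `order_id`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Flat table: the customer's city is repeated on every order row.
    CREATE TABLE orders_flat (order_id INTEGER, customer TEXT, city TEXT, item TEXT);
    INSERT INTO orders_flat VALUES
        (1, 'Asha', 'Pune',  'Pen'),
        (2, 'Asha', 'Pune',  'Book'),
        (3, 'Ben',  'Delhi', 'Pen');

    -- Decomposed tables: each customer's city is stored exactly once.
    CREATE TABLE customers (customer TEXT PRIMARY KEY, city TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer TEXT REFERENCES customers (customer),
        item     TEXT
    );
    INSERT INTO customers SELECT DISTINCT customer, city FROM orders_flat;
    INSERT INTO orders SELECT order_id, customer, item FROM orders_flat;
""")

customer_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

# Joining the smaller tables reproduces the original rows, so nothing is lost.
rebuilt = conn.execute("""
    SELECT o.order_id, o.customer, c.city, o.item
    FROM orders AS o
    JOIN customers AS c ON c.customer = o.customer
    ORDER BY o.order_id
""").fetchall()
original = conn.execute("SELECT * FROM orders_flat ORDER BY order_id").fetchall()

print(customer_count, rebuilt == original)
```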

24. What is PL/SQL?

PL/SQL stands for Procedural Language extensions to SQL. It was introduced by Oracle to overcome the limitations of plain SQL by adding a procedural programming approach on top of it.

With SQL alone we can run DDL and DML statements; with PL/SQL we can also create functions, triggers and other procedural constructs.

25. What is ETL in SQL?

ETL stands for Extract, Transform and Load. It is a three-step process. First, data is extracted from the various sources; once collated, this is still raw data. Second, the raw data is transformed into a tidy, consistent format. Finally, the tidy data is loaded into the target system, such as a data warehouse or analysis tool, where it can be used to find insights.

26. What is OLAP?

OLAP stands for Online Analytical Processing. It is a class of software programs characterized by a relatively low frequency of online transactions. Queries are often complex and involve many aggregations.

27. What is a “TRIGGER” in SQL?

- A trigger is a set of actions, written as a batch of SQL code, that runs automatically whenever an INSERT, UPDATE or DELETE command is run against a specific table.

- The trigger is said to be activated when these commands are given to the system.

- Triggers are a particular type of stored procedure, defined to execute automatically in place of or after data modifications.

- Triggers are created using the CREATE TRIGGER statement.
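A trigger of the kind described above might look like the following sketch. It uses SQLite's trigger syntax through Python's sqlite3 module, which differs in detail from other systems; the `accounts` and `audit_log` tables and the trigger name `log_balance_change` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (acc_id INTEGER PRIMARY KEY, balance INTEGER);
    CREATE TABLE audit_log (acc_id INTEGER, old_balance INTEGER, new_balance INTEGER);

    -- Runs automatically after every UPDATE of accounts.balance.
    CREATE TRIGGER log_balance_change
    AFTER UPDATE OF balance ON accounts
    BEGIN
        INSERT INTO audit_log VALUES (OLD.acc_id, OLD.balance, NEW.balance);
    END;

    INSERT INTO accounts VALUES (1, 100);
    UPDATE accounts SET balance = 150 WHERE acc_id = 1;
""")

# The application never wrote to audit_log directly; the trigger did.
log_rows = conn.execute("SELECT * FROM audit_log").fetchall()
print(log_rows)
```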

28. What is ACID property in a database?

The ACID properties ensure that transactions are processed reliably in a database system.

A transaction is a single logical operation on the data.

ACID is an acronym for Atomicity, Consistency, Isolation, Durability.

Atomicity: it requires that each transaction is all or nothing. It means if one part of the transaction fails, the entire transaction fails and the database state is left unchanged.

Consistency: the consistency property ensures that the data must meet all validation rules. In simple words, a transaction never leaves your database in a half-finished or invalid state.

Isolation: this property ensures that concurrently executing transactions do not interfere with one another; each transaction behaves as if it were running alone. The main goal of providing isolation is concurrency control.

Durability: durability means that once a transaction has been committed, it remains committed even in the event of power loss, crashes or errors.
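Atomicity in particular is easy to demonstrate. In the sketch below (SQLite via Python's sqlite3 module; the `accounts` table and the `transfer` function are hypothetical), a transfer consists of two updates, and when the second one violates a rule the first is rolled back as well.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (
        name    TEXT PRIMARY KEY,
        balance INTEGER CHECK (balance >= 0)  -- validation rule: no overdrafts
    );
    INSERT INTO accounts VALUES ('alice', 100), ('bob', 50);
""")

def transfer(amount):
    """Hypothetical transfer from alice to bob as one all-or-nothing unit."""
    try:
        # "with conn" commits on success and rolls back if an exception escapes.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = 'bob'",
                (amount,),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = 'alice'",
                (amount,),
            )
        return True
    except sqlite3.IntegrityError:
        return False

succeeded = transfer(500)  # alice only has 100, so the second update fails
balances = dict(conn.execute("SELECT name, balance FROM accounts"))

print(succeeded, balances)
```

Bob's balance is unchanged even though his update ran first, because the whole transaction was undone.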

29. What are the syntax and use of the COALESCE function?

The syntax of COALESCE function:

COALESCE(exp1, exp2, ..., expn)

The COALESCE function is used to return the first non-null expression given in the parameter list.
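A quick way to check this behaviour is to call the function with literal values. The sketch assumes SQLite via Python's sqlite3 module; the argument values are arbitrary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The first two arguments are NULL, so the third is returned;
# the fourth is never reached.
first_non_null = conn.execute(
    "SELECT COALESCE(NULL, NULL, 'fallback', 'ignored')"
).fetchone()[0]

# If every argument is NULL, the result is NULL (None in Python).
all_null = conn.execute("SELECT COALESCE(NULL, NULL)").fetchone()[0]

print(first_non_null, all_null)
```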

30. What is the SQL query to display the current date?

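Standard SQL provides CURRENT_DATE for the current date and CURRENT_TIMESTAMP for the current date and time. Individual systems also offer their own built-in functions; for example, SQL Server has GETDATE(), which returns the current timestamp.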

31. Are NULL values same as that of zero or a blank space?

A NULL value is not the same as zero or a blank space. NULL represents a value that is unavailable, unknown, unassigned or not applicable, whereas zero is a number and a blank space is a character.
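The distinction shows up directly in comparisons. In this sketch (SQLite via Python's sqlite3 module), comparing NULL with anything using `=` yields NULL rather than true or false, which is why `IS NULL` is used to test for it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

null_eq_zero, null_eq_blank, null_eq_null, null_is_null, zero_eq_zero = conn.execute(
    "SELECT NULL = 0, NULL = ' ', NULL = NULL, NULL IS NULL, 0 = 0"
).fetchone()

# Comparisons with NULL come back as NULL (None); SQLite reports true as 1.
print(null_eq_zero, null_eq_blank, null_eq_null, null_is_null, zero_eq_zero)
```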

32. What is subquery in SQL?

A subquery is a query nested inside another query to retrieve data from the database. The outer query is called the main query, whereas the inner query is called the subquery. A simple (non-correlated) subquery is evaluated first and its result is passed on to the main query; a correlated subquery, which refers to columns of the main query, is evaluated for each row the main query processes. A subquery can be nested inside a SELECT, UPDATE or any other statement, and it can be used with comparison operators such as >, < or =.
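For instance, a non-correlated subquery can supply a single value for the main query to compare against. The sketch below assumes SQLite via Python's sqlite3 module; the `employees` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (emp_name TEXT, salary INTEGER);
    INSERT INTO employees VALUES ('Asha', 50000), ('Ben', 30000), ('Chen', 40000);
""")

# The inner query computes the average salary (40000 for this data);
# the main query then keeps the employees who earn more than that.
above_average = conn.execute("""
    SELECT emp_name
    FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY emp_name
""").fetchall()

print(above_average)
```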

33. What is the difference between ‘HAVING’ CLAUSE and a ‘WHERE’ CLAUSE?

The WHERE clause filters individual rows before they are grouped, so it cannot refer to aggregate functions. The HAVING clause filters the groups produced by GROUP BY, after aggregation, so it can use conditions on aggregates such as SUM or COUNT.

HAVING is used in SELECT statements, usually together with GROUP BY. When GROUP BY is not used, the whole result is treated as a single group, and in some systems HAVING then behaves much like a WHERE clause.
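Both clauses can appear in the same query, which makes the order of filtering visible. This is a minimal sketch assuming SQLite via Python's sqlite3 module; the `sales` table and its amounts are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, amount INTEGER);
    INSERT INTO sales VALUES
        ('North', 100), ('North', 300),
        ('South', 50),  ('South', 20),
        ('East', 500);
""")

big_regions = conn.execute("""
    SELECT region, SUM(amount) AS total
    FROM sales
    WHERE amount >= 50        -- row filter: drops the 20 sale before grouping
    GROUP BY region
    HAVING SUM(amount) > 200  -- group filter: drops South (total 50)
    ORDER BY region
""").fetchall()

print(big_regions)
```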

34. How can you fetch first 5 characters of the string?

There are a lot of ways to fetch characters from a string. For example:

SELECT SUBSTRING(StudentName, 1, 5) AS studentname FROM student;

35. What is a View?

A view is a virtual table that presents a subset of the data contained in one or more tables. Since a view does not store the data itself (only its defining query is stored), it takes very little space. A view can combine data from one or more tables, depending on the relationships between them.

36. What are Views used for?

A view refers to a logical snapshot based on a table or another view. It is used for the following reasons:

- Restricting access to data.

- Making complex queries simple.

- Ensuring data independence.

- Providing different views of same data.
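The first two uses, restricting access and simplifying queries, can be sketched as follows. The example assumes SQLite via Python's sqlite3 module; the `employees` table and the view name `employee_directory` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (emp_id INTEGER PRIMARY KEY, emp_name TEXT, salary INTEGER);
    INSERT INTO employees VALUES (1, 'Asha', 50000), (2, 'Ben', 30000);

    -- The view exposes names but hides the salary column.
    CREATE VIEW employee_directory AS
        SELECT emp_id, emp_name FROM employees;
""")

cursor = conn.execute("SELECT * FROM employee_directory")
view_columns = [column[0] for column in cursor.description]

# A view stores no rows of its own, so new table data shows up immediately.
conn.execute("INSERT INTO employees VALUES (3, 'Chen', 40000)")
view_rows = conn.execute(
    "SELECT * FROM employee_directory ORDER BY emp_id"
).fetchall()

print(view_columns, view_rows)
```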

37. What is a Stored Procedure?

A stored procedure is a named routine made up of one or more SQL statements that access the database system. Several SQL statements are consolidated into a stored procedure and executed whenever and wherever required, which saves time and avoids writing the same code again and again.

38. List some advantages and disadvantages of Stored Procedure?

Advantages:

A stored procedure supports modular programming: create it once, store it, and call it as many times as required. This supports faster execution. It also reduces network traffic and provides better security for the data.

Disadvantages:

The main disadvantages of a stored procedure are that it can be executed only in the database and that it uses more memory on the database server.

39. What is Auto Increment in SQL?

The auto-increment feature (AUTO_INCREMENT in MySQL, IDENTITY in SQL Server) automatically generates a unique number whenever a new record is inserted into the table.

It is most often used on the PRIMARY KEY column.
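As a small illustration, the sketch below uses SQLite's spelling of the feature, `AUTOINCREMENT`, through Python's sqlite3 module; the keyword and its exact behaviour differ in other systems, and the `students` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE students (
        stu_id   INTEGER PRIMARY KEY AUTOINCREMENT,
        stu_name TEXT
    )
""")

# Only the name is supplied; the database generates stu_id.
conn.executemany(
    "INSERT INTO students (stu_name) VALUES (?)",
    [("Asha",), ("Ben",)],
)

generated = conn.execute(
    "SELECT stu_id, stu_name FROM students ORDER BY stu_id"
).fetchall()

print(generated)
```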

40. What is a Datawarehouse?

A data warehouse is a central repository in which data is assembled from multiple sources of information. The data is consolidated, transformed and made available for mining as well as online processing. A data warehouse can also contain subsets of data called data marts.

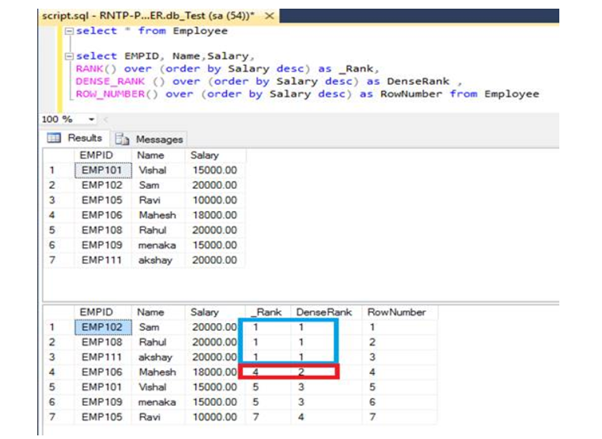

41. What are the RANK, DENSE_RANK and ROW_NUMBER functions?

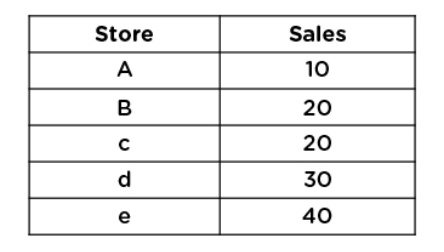

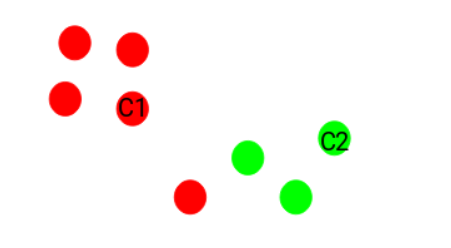

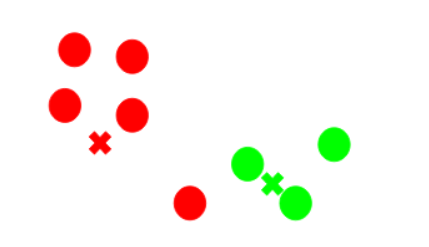

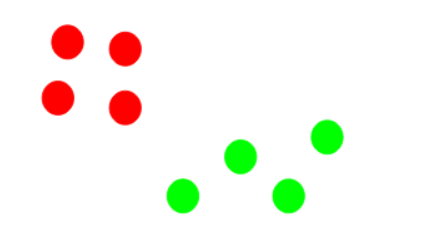

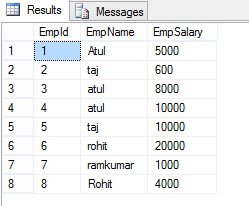

Let's understand these functions with the following example:

Here we have an Employee table; the following is its sample data.

ROW_NUMBER() Function with Partition By clause:

ROW_NUMBER() assigns a sequential number, starting at 1, to each row in the order given by the ORDER BY inside its OVER clause. This makes it useful for more complex ordering and numbering of rows in reports.

Syntax

ROW_NUMBER() OVER ([PARTITION BY value_expression, ... [ n ]] order_by_clause)

If we want to number the rows within each group, PARTITION BY resets the numbering for every group. Let's take a look at the Employee table.

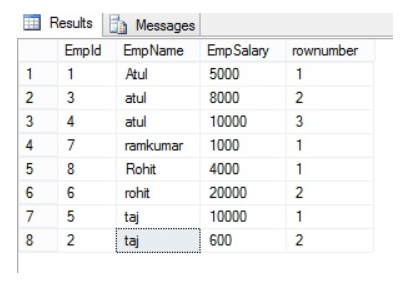

SELECT *, ROW_NUMBER() OVER (PARTITION BY Empname ORDER BY Empname) AS rownumber FROM Employee;

The following is the output of the above query:

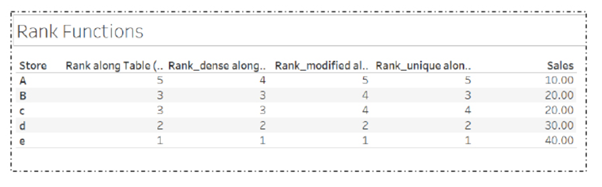

Rank() Function in SQL Server:

This function assigns a rank to each row. Rows with the same value receive the same rank, and a gap is left in the ranking after each group of tied rows.

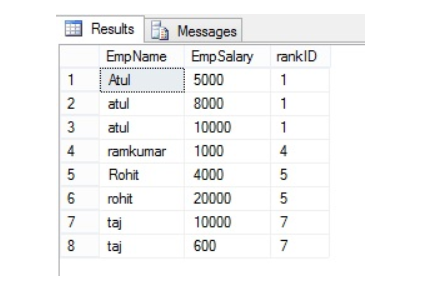

SELECT EmpName, EmpSalary, RANK() OVER (ORDER BY EmpName) AS rankID FROM Employee;

The following is the output of the above query.

The gap reflects the number of tied rows. For example, EmpName = "atul" is repeated 3 times and has rank 1, so the next rank is 1 + 3 = 4, and the same applies to the values that follow.

Dense_Rank() Function in SQL Server:

The Dense_Rank() function is similar to Rank(), with one difference: it does not leave gaps between groups.

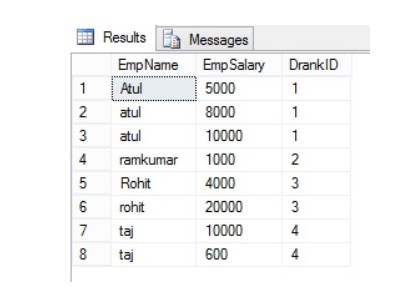

SELECT EmpName, EmpSalary, DENSE_RANK() OVER (ORDER BY EmpName) AS DrankID FROM Employee;

The following is the OUTPUT of the above query:

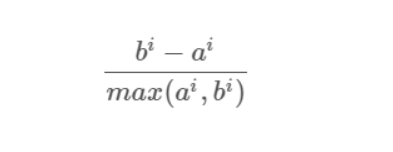

Summary:
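The three functions can be compared side by side in one query. This is only a sketch: it assumes SQLite 3.25 or later (the first version with window functions) via Python's sqlite3 module, and the rows below are made-up stand-ins for the Employee sample data, chosen so that "atul" appears three times as in the explanation above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical sample rows; the original sample data is not reproduced here.
    CREATE TABLE Employee (EmpName TEXT, EmpSalary INTEGER);
    INSERT INTO Employee VALUES
        ('atul', 100), ('atul', 200), ('atul', 300),
        ('bina', 150), ('chetan', 250);
""")

rows = conn.execute("""
    SELECT EmpName,
           ROW_NUMBER() OVER (ORDER BY EmpName, EmpSalary) AS row_num,
           RANK()       OVER (ORDER BY EmpName)            AS rank_id,
           DENSE_RANK() OVER (ORDER BY EmpName)            AS dense_rank_id
    FROM Employee
    ORDER BY EmpName, EmpSalary
""").fetchall()

for row in rows:
    print(row)
```

ROW_NUMBER numbers every row differently, RANK jumps from 1 to 4 after the three tied "atul" rows, and DENSE_RANK continues with 2.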

42. Explain Normalization and De-Normalization.

Normalization is the process of removing redundant data from the database by splitting the table in a well-defined manner in order to maintain data integrity. This process saves much of the storage space.

De-normalization is the process of deliberately adding redundant data to tables in order to speed up complex queries and thus achieve better performance.

43. What is a deadlock?

It is an unwanted situation where two or more transactions are waiting indefinitely for one another to release the locks.

44. What is ALIAS command?

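An alias gives a temporary name to a table or a column for the duration of a query. It is defined with the AS keyword, in the SELECT list for a column alias or in the FROM clause for a table alias. A table alias can then be used in other clauses of the query, such as WHERE.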

45. What is Datawarehouse?

Datawarehouse is a central repository of data from multiple sources of information. Those data are consolidated, transformed and made available for the mining and online processing. Warehouse data have a subset of data called Data Marts.

46. What is OLTP?

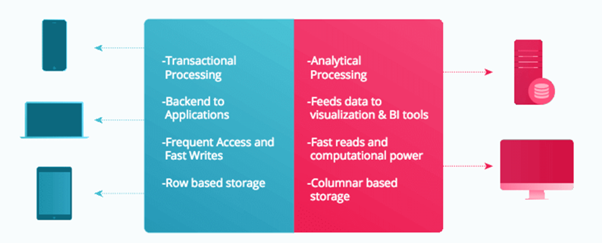

OLTP stands for Online Transaction Processing. It is a class of software applications capable of supporting transaction-oriented programs. An essential attribute of an OLTP system is its ability to maintain concurrency. To avoid single points of failure, OLTP systems are often decentralized. These systems are usually designed for a large number of users who conduct short transactions. Database queries are usually simple, require sub-second response times and return relatively few records. Here is an insight into the working of an OLTP system.

47. What are the differences between OLTP and OLAP?

OLTP stands for Online Transaction Processing. It is a class of software applications capable of supporting transaction-oriented programs. An important attribute of an OLTP system is its ability to maintain concurrency. OLTP systems often follow a decentralized architecture to avoid single points of failure. These systems are generally designed for a large audience of end users who conduct short transactions. Queries involved in such databases are generally simple, need fast response times and return relatively few records. The number of transactions per second acts as an effective measure for such systems.

OLAP stands for Online Analytical Processing. It is a class of software programs characterized by a relatively low frequency of online transactions. Queries are often complex and involve many aggregations. For OLAP systems, the effectiveness measure relies highly on response time. Such systems are widely used for data mining or maintaining aggregated, historical data, usually in multi-dimensional schemas.
